So what's it all about?

Many approaches to software testing exist, but few acknowledge the small window of time a software tester has during any build cycle to complete their task.

Adaptive Software Testing Approach (ASTA) is a collection of ways to apply software testing to make the best use of the time allocated to testing on any given project. It ensures we assess risk, test early when required, apply testing in a lightweight form when required, and perform highly scripted testing when required. This website aims to give the reader a general overview of ASTA.

The origin of the Adaptive Software Testing Approach

As testers we have a responsibility to check the quality of software. We have a responsibility to make the best use of our time and to report what we find, clearly and concisely, to the parties invested in the quality of the product (developers, product owners, etc.). We also have the responsibility to report test metrics to management, giving them an easy-to-interpret overall view of the quality of the product.

In my many years as a tester and test manager I have used various approaches, worked within many software delivery methodologies, and seen first-hand what works and what doesn't when it comes to delivering testing. These observations and experiences are what I have used to formulate the Adaptive Software Testing Approach.

The purpose of this website is to share information about this approach. I hope that the clear and concise approach to testing tasks presented by ASTA will serve you well.

Giving a tester autonomy & making the best use of a tester's time

A tester should be given the autonomy to decide how they will test. We are employed to make decisions and trusted to execute them properly.

ASTA means each testing task is approached individually, based on risk and time constraints.

For example: the testing of a small one-line change can be acknowledged with a comment on the ticket and a short list of the scenarios covered. A large new feature, however, will require the tester to put more consideration into how to test it, and to estimate the time it will take to complete testing.

If test cases are required ahead of time for a testing task and it feels comfortable to write them, write them. This is usually the case where complex functionality will exist.

If time constraints mean full tests can't be written, it makes more sense to write throwaway scripts which cover the main functionality and guide you to open up testing around that functionality.

If the nature of the project means the AUD needs to be explored because documentation or understanding of expected behaviour is limited (proofs of concept, prototypes), exploratory testing should be applied.

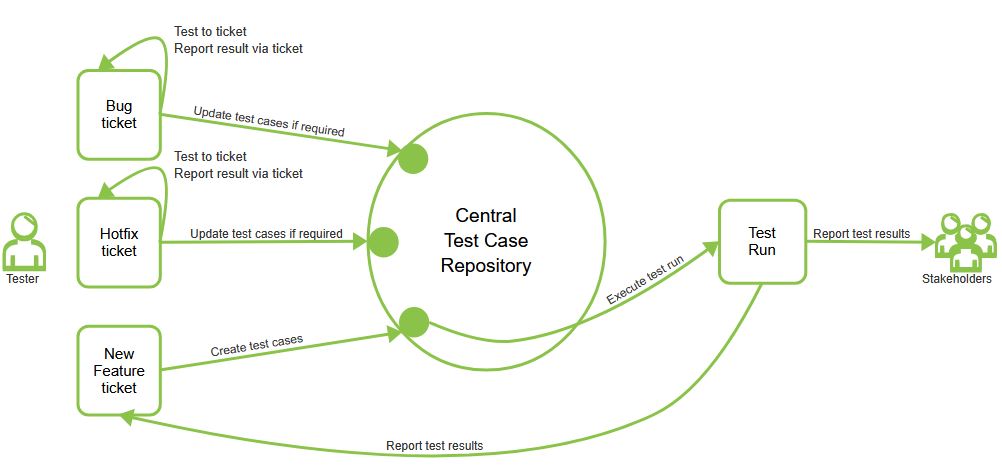
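
The choices described above can be sketched as a small decision function. This is only an illustration, not a formal part of ASTA: the names `TaskProfile`, `Approach` and `choose_approach`, the three yes/no inputs, and the order in which they are checked are all my assumptions for the sake of the example. In practice the tester makes this call using their own judgement.

```python
from dataclasses import dataclass
from enum import Enum


class Approach(Enum):
    """Hypothetical labels for the four options described in the text."""
    TICKET_COMMENT = "comment on the ticket with a short list of scenarios covered"
    SCRIPTED = "test cases written ahead of time"
    THROWAWAY = "throwaway scripts covering the main functionality"
    EXPLORATORY = "exploratory testing"


@dataclass
class TaskProfile:
    """Assumed yes/no summary of a testing task (illustrative only)."""
    small_change: bool          # e.g. a one-line change
    behaviour_documented: bool  # is the expected behaviour understood?
    time_for_full_tests: bool   # is there time to write full test cases?


def choose_approach(task: TaskProfile) -> Approach:
    # Small changes only need a lightweight record on the ticket.
    if task.small_change:
        return Approach.TICKET_COMMENT
    # Limited documentation (prototypes, proofs of concept): explore first.
    if not task.behaviour_documented:
        return Approach.EXPLORATORY
    # Time allows: write the test cases ahead of time.
    if task.time_for_full_tests:
        return Approach.SCRIPTED
    # Otherwise fall back to throwaway scripts around the main functionality.
    return Approach.THROWAWAY
```

For instance, `choose_approach(TaskProfile(small_change=False, behaviour_documented=True, time_for_full_tests=False))` selects the throwaway-script option.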

The central repository

At the core of ASTA is the central repository. Alongside the daily testing activities, a central repository of test cases covering all functionality must be maintained. The test cases within it can then be automated (based on priority), used to guide a manual regression test cycle, used to report coverage to stakeholders, and used to help new team members learn the product. The central repository can also serve as a resource to document the expected behaviour of the application by way of test cases - this is especially beneficial in a prototyping environment.
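
As a rough sketch of how such a repository might be queried, the example below models each test case as a small record and derives two of the uses mentioned above: an automation backlog ordered by priority, and a per-area coverage count for stakeholders. The record fields, the function names, the sample data, and the convention that priority 1 is the highest are all assumptions made for illustration. ASTA does not prescribe a particular tool or schema.

```python
from dataclasses import dataclass


@dataclass
class RepoCase:
    """One test case in the central repository (hypothetical schema)."""
    title: str
    area: str        # functional area the case covers
    priority: int    # assumed convention: 1 is the highest priority
    automated: bool  # has this case been automated yet?


def automation_backlog(cases):
    """Cases not yet automated, highest priority first."""
    pending = [c for c in cases if not c.automated]
    return sorted(pending, key=lambda c: c.priority)


def coverage_by_area(cases, areas):
    """Number of test cases per functional area; 0 marks a coverage gap."""
    counts = {area: 0 for area in areas}
    for case in cases:
        if case.area in counts:
            counts[case.area] += 1
    return counts


# Made-up sample data, purely for illustration.
cases = [
    RepoCase("Login with valid password", "login", 1, True),
    RepoCase("Password reset email", "login", 2, False),
    RepoCase("Export report as CSV", "reports", 1, False),
]

for case in automation_backlog(cases):
    print(f"automate next: {case.title} (priority {case.priority})")

print(coverage_by_area(cases, ["login", "reports", "billing"]))
```

An area reporting zero cases (here the made-up "billing" area) is a prompt to add test cases before the next regression cycle.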

Putting ASTA into practice

Depending on your team structure, ASTA is most efficient when two testers work in tandem, with one tester covering the analysis and manual tasks and the other covering automation.

I can't stress enough the benefits of having two testers going over your product with a fine-tooth comb daily.

ASTA can also be applied when a project has a sole tester, although the process differs slightly from the two-tester approach.

Here is an example of what a single tester's day may look like when ASTA is applied within a Scrum project:

That's all for now

This website aims to give the reader a general overview of ASTA.

For more detailed information on how to apply ASTA, my consulting services are available.